A 10-point prescription for medical & social care robotics

What obstacles need to be overcome for robots to work in health and social care? And is automating care a good idea? Chris Middleton reports.

Healthcare is one of the main hotspots for the transformative application of robotics and AI. Our bodies can be mapped in microscopic detail, as can the human genome, so robotic-assisted microsurgery and the use of AI in disease prevention, detection, diagnosis, and cure are good applications of these technologies, complementing the skills of human doctors.

Speaking at the World Economic Forum 2017 in Davos, Microsoft CEO Satya Nadella described AI as a “transformative force”, which will help oncologists and radiologists “use cutting-edge object recognition technology to not only do early detection of tumours, but also to predict tumour growth so that the right regimen can be applied”.

But the well-being of elderly, sick, or disabled people is a more controversial area for robotics, because of the risks of dehumanising care and appearing to put profit ahead of people. That said, a recent survey found that one-third of people over the age of 55 have already implemented smart home technologies, while the Economist Intelligence Unit has produced a report saying that people over the age of 60 are the most disruptive users of new technology.

The social care market has particular appeal in the UK and US, where 65+ populations are set to soar over the next 20 years. In the UK alone, the numbers will increase from 12 to 17 million by 2035, while in the US, 65+ citizens will rise from 15 to 24 per cent of the population by 2060. Yet despite these shifting demographics, the per capita investment in social care has fallen by one-third in real terms over the past decade. So the opportunity for the sensitive application of new technologies – including humanoid care robots, tele-robotics, tele-medicine, autonomous vehicles, assistive devices, smart domestic hubs, and more – is huge, given the shortfall of qualified staff and investment.

These are the ethical, social, and financial dimensions of helping vulnerable people to lead more independent lives. But there are technology challenges too, before advanced social-care robotics can even be considered a viable option, according to UK-RAS, the organisation for UK robotics research. Its special report, Robotics in Social Care: A Connected Care Ecosystem for Independent Living, says that advances are needed in ten key areas. These are:

Scene awareness

GPS doesn’t operate inside buildings, so AI must advance to the point where it can map and understand home environments that are designed for people, not machines. This demands the interoperation of several technologies, including: object recognition; machine learning; smart home integration; and environmental tagging, along with the flexibility to understand that human environments are subject to constant change.

Social intelligence

To a computer, a human is just a collection of pixels, despite advances in facial recognition. This is just the tip of a very big iceberg. UK-RAS says: “To be able to help people, robots first need to understand them better. This will involve being able to recognise who people are, and what their intentions are in a given situation, then to understand their physical and emotional states at that time, in order to make good judgements about how and when to intervene.”

Many humans find this difficult, so the task facing researchers is daunting – especially for those who prefer the ‘on/off, yes/no’ realm of computers to the complex, emotional world of people. Joichi Ito, head of MIT’s Media Lab in the US, has observed that many programmers prefer binary systems to the messy human alternative.

Physical intelligence

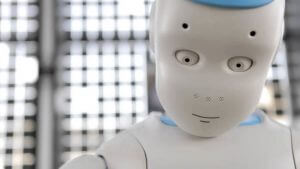

Some humanoid robots can walk, talk, climb stairs, and even run (in the case of Honda’s ASIMO), but most robots fall short of the sophistication needed for physical contact with vulnerable people. Emulating the dexterity and sensitivity of a skilled doctor, nurse, or care worker – not to mention their tact and strength when lifting or dressing patients – is a huge challenge for machines. These aren’t assembly line processes.

Safety must be paramount, and many robot designers are now moving away from replicating humanoid forms. Wheels are safer and more stable than legs in many situations, for example.

Communication

Anyone who has first-hand experience of advanced humanoid robots, such as PAL Robotics’ REEM, or Aldebaran/SoftBank’s emotion-sensing Pepper, is aware that expectation management is a major factor. The gap between what people expect from interacting with robots (intelligent one-to-one conversation) and the reality (a clever mix of programmed phrases and cues) remains a chasm.

The apparent sophistication of some robots’ speech masks an almost complete lack of understanding of what people are actually saying to each other. Most robots that have onboard intelligence respond to trigger words with a range of pre-programmed actions. Smartphone/home hub systems such as Alexa or Siri fare better and are constantly improving, but these are still a long way from natural language communication.

Natural language processing in the cloud, via AI services such as IBM’s Watson, is a partial solution, but most researchers accept that onboard or local intelligence will be vital: robots can’t rely on the stability, security, and speed of future networks. Speech recognition systems are improving, but these often fail in noisy environments, or when dealing with impaired speech, or regional accents and dialects. Just as important, human beings need to be able to understand the robot, too.

Touchscreens are increasingly common in robot design, suggesting that many machines may evolve into little more than smartphones on wheels.

Data security

UK-RAS says that robotics and autonomous systems will benefit from “learning offline and on the job”, based on the latest advances in machine learning. It adds: “Acquired knowledge should be transferable between robot platforms and tasks, given appropriate safeguards around data privacy.”

However, this raises some interesting questions about data protection, privacy, security, and sovereignty – especially post-GDPR. Will people have to opt in to being recognised, treated, and discussed by robots? Can they insist on being forgotten by them? And how can robotics and AI developers ensure that social/healthcare robots are safe from hackers in private residences or hospitals? These are considerations that affect all IoT devices.

Memory

Human beings make sense of the world through memory, as much as via their senses. Autonomous machines will need to do the same: robots working in social and healthcare will need memories of previous events and be able to associate them with different people’s routines and preferences. To be useful, this data will need to be classified and tagged, and retrieved via contextual and user-provided cues. But it will also need to conform to data protection regulations.

Autonomy and safe failure

Care robots will need to be effective 24×7. As a result, self-monitoring, self-diagnosis, and autonomous failure recovery need to be robust. When systems fail, it’s important that they can do so without putting users at risk.

Sustainability

Imagine a smartphone that weighs as much as a person and is full of electric motors. It’s little wonder that battery life is a major challenge in autonomous robots that move around and, in the case of humanoid machines, carry their own weight.

Assistive technologies will need to be designed to optimise energy efficiency, with a clear strategy for reuse and recycling, says UK-RAS. At the same time, mobile systems should be able to recharge autonomously, without the need to carry heavy, expensive, and environmentally hazardous batteries.

Dynamic autonomy

Care professionals will need the ability to override or tele-operate robots if patients are being put at risk by them. However, while variable autonomy is an emerging discipline, it is still in its infancy.

Validation and care

Future care systems will need to be tested, benchmarked, regulated, and certified to perform their tasks reliably, safely, and securely.

However, one thing missing from the UK-RAS report is the question of clinical cleanliness and disinfection. In hospitals and care homes, robots will need to prevent the spread of diseases, which may mean being able to self-disinfect. Either way, easy cleaning will be an essential design factor.

As with autonomous vehicles, the question of responsibility when a human being is harmed by a robot remains highly contentious. If someone trips over a delivery robot in the street, the legal and insurance issues remain unresolved; with medical and social care, these may be an order of magnitude more complex.

Conclusions

The idea of humans replicating themselves in machine form may be the ultimate expression of narcissism, but it serves little real-world function. There’s no logical reason why robots should take humanoid form, unless it’s necessary to carry out a task more successfully. The only other reason might be to entertain people or put them at ease, but robots frighten just as many people as they fascinate – another factor that influences their design.

To avoid this problem, many robots – such as NAO and Pepper – are designed to be ‘cute’, so that people want to take care of them. Reversing that process to create a robot that a vulnerable person is happy to be treated by is a fascinating challenge.

But one thing is clear: Doctor Robot will soon be ready to see you, and to help you live independently in your home when you retire. What ‘he’, ‘she’, or it will look like depends on solving all of the above problems.

However, the healthcare impact of robotics is far from certain: the development costs alone are a negative factor, with some machines currently retailing at up to a quarter of a million dollars for a system that may be obsolete in two years’ time. Demand is another: Johnson & Johnson discontinued its Sedasys anaesthetic-delivery robot in 2016, due to poor sales.

So while Doctor Robot may be on call, we don’t all want to make an appointment.

.chrism

• A version of this article was first published on diginomica.

• For more articles on robotics, AI, and automation, go to the Robotics Expert page.

Enquiries

07986 009109

chris@chrismiddleton.company

© Chris Middleton 2017.