Facial recognition: Secure payment platform, or the perfect crime?

Chris Middleton asks whether technologies such as Face++ point towards a future of ultimate security and conformity, or to entirely new types of crime.

• Author’s note: Since this report was published in October 2017, one of the predictions I made has already come true. See note at the foot of this article.

In the near future, humans will be engaged in a high-stakes face-off against AI. Here’s why.

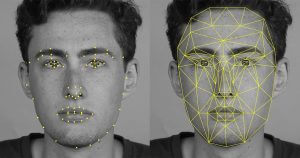

DNA and fingerprints aside, the human face is regarded as the ultimate piece of Personally Identifiable Data (PID). It’s certainly the easiest for us to use: we’re all hardwired to recognise faces, both in the real world and in 2D recordings of them, which is why a technology that’s nearly two centuries old, photography, still grants us access to offices, international travel, and more, via our passports, driving licences, and ID cards.

But the new industrial age of robotics, AI, and big data isn’t interested in 2D anymore; it wants to delve deeper into our appearance, via technologies that are similar to those used in movies to motion-capture actors’ performances.

As AI, big data, and the Internet of Things rise, 3D facial recognition technologies are the new hotspot: computers and robots are being trained to recognise people from every angle, and authentication is the key application. Soon – as in the 2002 film Minority Report – scans of our faces in shops and public spaces may trigger personalised services and adverts, and allow us to access transport networks, visitor attractions, and more.

In such a future, some people may choose to hide their faces or block their cameras to shut out the advertising noise, while others may happily pose for the lens to join in-store loyalty programmes or gain access to free wifi, special offers, and more. Omni-channel services will increasingly rely on being able to recognise us via the cameras on our phones, while brands may use our faces to advertise products: it will be up to us to read the Ts and Cs.

Chinese whispers

Apple and Vivo are among the companies already using facial recognition as security/login systems on mobiles, but one company has gone much further and sees the human face as the ultimate focal point for AI-enabled personalised services.

Face++ describes itself as a cognitive services provider. The billion-dollar startup and platform is owned by Chinese AI giant Megvii, whose deep-learning technologies focus in on the minute details, movements, and nuances of a face that make personal identification more reliable and secure.

Its technology has already spread far and wide. Face++ clients in China include mobile payment platform Alipay, diversified tech giant Lenovo, photo-editing apps Camera 360 and Meitu, in-car facial recognition service UCAR (used by ride-sharing app Didi, among others), dating service Jiayuan, and video-sharing platform Kuaishou. The platform is finding customers in the West, too.

The breadth of these applications reveals just how prevalent facial recognition technologies will become over the next ten years, using deep scans of faces to unlock banking, shopping, dating, travel, and other applications, replacing credit/debit cards as a secure transaction platform. Other companies are rushing to catch up, such as China’s most popular search engine, Baidu.

Service with a smile

Several retailers in China are already moving towards ‘payment with a smile’: a customer stands in front of an in-store camera, smiles, and money is deducted from their account. It’s the smile that’s the clincher in ID terms, with the subtle movements of facial muscles providing an extra layer of security and accuracy.

How this might work with angry customers is a moot point, while doppelgängers, identical twins, botox, and plastic surgery may pose further problems to the system, but deep scans are designed to uncover subtleties that aren’t obvious to the naked eye. Even so, not everyone is comfortable in front of the camera – sometimes for mental health reasons – and so any forced application of the technology may push customers away.

In vast, populous societies such as China, where ID is critical, the internet is regulated, and citizen behaviour is tightly controlled (see below), technologies like Face++ catch on more quickly than in the West, and people’s preferences are being logged en masse. Soon, Chinese consumers will be what they eat, use, and buy in the eyes of the technology platforms that are fed with citizen data, linked to their physical appearance.

Chinese fintech companies are already using social credit scoring, which links citizens’ financial status with their social media profiles and friend networks on apps such as WeChat and Taobao. In other words, some citizens are being offered or denied credit depending on who their friends are. Meanwhile, the Smart Red Cloud is an AI application developed to monitor, evaluate, and educate members of the Communist Party.

It stands to reason that, in any such big-data fuelled society, some services or products could soon be denied to individuals, too, based on their personal profiles, friends, and/or scans of their faces and bodies. Citizen behaviour controlled at the checkout: who would bet against it?

Big data meets Big Brother

Indeed, it’s already happening. In 2020, China will introduce a mandatory social credit scoring system that embraces every aspect of citizen behaviour, from shopping and financial management to personal relationships and friend networks – similar to the system envisaged in the Black Mirror episode ‘Nosedive’.

People with good ratings will be rewarded with preferential treatment everywhere they go (including greater prominence on dating sites) while those with poor social scores will be denied services and even restricted from travelling – a policy that can be seen as the ‘gamification’ of conformity. “If trust is broken in one place, restrictions are imposed everywhere,” states the policy, which will “allow the trustworthy to roam everywhere under heaven while making it hard for the discredited to take a single step”.

And at every point, this nationwide policy to impose conformity on 1.3 billion people will be linked to scans of citizens’ faces and bodies.

Some Face++ applications have obvious social or healthcare benefits. For example, ‘skin status evaluation’ is at the beta stage, says the company. It uses deep learning to detect skin problems, such as acne or early-stage cancers, and can be linked to personalised recommendations and treatments.

Body detection, body outlining, and gesture recognition are also at an advanced stage, says Face++, enabling developers to detect body shapes and behaviours from a mass of raw camera data, while ‘gaze estimation’ tracks how the human eye moves around a screen. Inevitably, this will be used to push advertising. Coupled with emotion recognition, this technology could be disruptive in countless ways, or simply intrusive. A number of companies in the West are developing similar gaze/emotion tracking systems, such as Affectiva, nViso, and Emteq.

The beauty challenge

Other applications are more troubling, and reveal how the cultural differences between societies don’t translate easily via technology platforms: AI is frequently modelled on human society; it is not somehow separate and distinct.

‘Beauty Score’ is another Face++ technology that’s at the beta stage. It applies machine learning to evaluate a person’s attractiveness, says the company, and can recommend makeup to ‘improve’ their appearance and link individuals to their ideal matches online – statements that suggest the technology will be used to objectify women, or push them towards conforming to social norms or standards set by the fashion and beauty industries. In the post-Weinstein age, such AI-enabled behaviours would not go unchallenged in the West.

The underlying problem with Face++’s Beauty Score application is that it makes implicit assumptions about what beauty is (and isn’t), and what attributes people are attracted to. This ignores the fact that the concept of beauty is subjective, mutable, local, culturally diverse, and changes constantly over time.

Will the technology reflect that diversity, or simply reflect the preferences of its designers? This is a more important question than many people realise, because it has already been demonstrated that AI tends to reflect its designers’ choice of training data when it comes to assessing human ideals, such as beauty, as this separate report explains.

One long-term effect of the application may be that people begin to conform to machine-generated concepts of beauty, while vulnerable people may believe that they aren’t attractive enough because a machine has told them so. Eating disorders, anxiety, depression, and cyberbullying are already endemic among young people; a ‘beauty score’ seems likely to deepen these problems.

Abuses of the system

Throw more AI and predictive analytics into the mix, and it’s clear that any such system could also be open to abuse. Facial recognition and AI systems have already been used experimentally to predict if people have particular illnesses, and even to determine their sexual orientation from a photograph. While discrimination against many minority groups is illegal in some countries, algorithm-based discrimination would be much harder to detect, and could open the floodgates to invisibly automated bias within certain industries, such as life insurance.

Sadly, fears about bias, discrimination, and lack of diversity are far from scaremongering when it comes to AI, as this report reveals. For example, the Face++ system itself can be used to ‘beautify’ video footage, says the company, and one of its key algorithms is ‘skin whitening’.

The Face/Off scenario

So do technologies such as Face++ point towards a future of ultimate security and personalisation? Or to a society of automated bias, conformity, and control at the checkout – a monoculture based on superficial appearances? (“I’m sorry, sir, our body scan indicates your BMI is poor, and our facial scan suggests you have diabetes: we cannot sell you this product. Alternatively, click to accept this higher life insurance premium.”)

And there’s another question: is the system foolproof? Bizarre and unlikely though it may seem, another technology may be poised to throw a spanner in the works: 3D printing*.

Real-F and Thatsmyface.com are among the companies that are enabling customers to have their own – or other people’s – faces 3D printed onto a variety of items. These include realistic, wearable masks of the kind that recall another movie, Mission: Impossible. (A third Hollywood blockbuster, Face/Off, featured two characters wearing each other’s faces.)

The underlying technology is exactly the same as facial recognition: a deep 3D scan of a person’s face, down to the microscopic details that add realism, but realistic masks can also be generated from multiple high-res photographs, say the companies.

Real-F, in particular, is focusing on absolute realism. The English-language version of the website says “The REALFACE looks exactly like the original face based on [a] technique called 3DPF (Three-Dimensional Photo Form). Our original 3PDF technique […] makes it possible to duplicate skin texture, the eye blood vessels and iris, and even your eye appeal exactly the same as the originals which an ordinal [sic] portrait can’t do. If you are thinking to leave a memory of yourself or your loved one, the REALFACE is the best fit.”

The company says it is developing realistic soft skin for its masks, too, and – from the examples on the website, at least – the results seem extremely realistic [see pictures].

As with Face++, some applications of 3D printing faces and other body parts could be transformative, in every sense, such as the ability to create a replacement face for someone who has been disfigured by illness or accident. Primitive versions of this technology have already been used in a handful of cases to do just that.

But how long will it be before someone uses these technologies to fool facial recognition systems by using a realistic mask of someone else’s face? And how long before someone commits a crime using a stranger’s face – perhaps yours? The idea isn’t as far-fetched as it sounds*. [See note below – CM]

It was Isaac Newton who observed that every action carries an equal and opposite reaction. He was describing classical mechanics, but the principle applies in the virtual world too, as every internet security company that is engaged in an arms race with hackers knows: threats evolve as security evolves.

On the face of it, the relentless march of AI technologies might appear to be making it harder for people to fool the system, but as this video of President Obama giving a speech proves, the same technologies can also be used to fool the public. The footage is faked using AI and CGI, and yet is extremely convincing.

So, welcome to the future. Should we look into the lens and face it with a smile? Or frown, pull up our coat collars, and walk the other way? One thing is clear: it’s time to decide.

*: This article was published in October 2017. In November 2017, The Register and other tech news outlets reported that the facial recognition system of Apple’s iPhone X had been cracked by hackers wearing a $150 3D printed mask, as I predicted. /cm

• For more articles on robotics, AI, and automation, go to the Robotics Expert page.

• A version of this article was first published on Hack & Craft News.

Enquiries

07986 009109

chris@chrismiddleton.company

© Chris Middleton 2017.