Chris looks at the dangerous rise of machine decision-making.

The robots aren’t coming, they’re already here. The bad news is they’re us – unless we write new rules that benefit all human society. Chris Middleton presents a personal report on what can happen when organisations leave too many decisions up to machines.

OPINION Technology is the first thing blamed by customers who are struggling to get banks, utilities, local councils, telcos, and other organisations to understand them. But the real problems are the rules, the policies, that some organisations write before paying a technology company to cast them into software as digital statements of their beliefs.

Combined with poor or incomplete data in a networked, automated world, the output of this ‘read only’ process can be a dystopian nightmare for any customer or citizen who doesn’t fit in. Later on, I’ll introduce you to just such a man; his story will shock you. And because the employees in many organisations obey the same rules that the software does – often by reading them aloud to customers from a computer screen – awkward humans can be made to disappear.

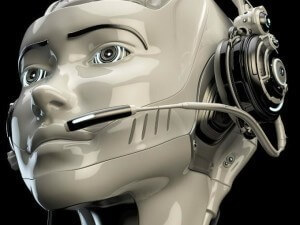

The origin of the word ‘robot’ is the Czech word robota, meaning ‘forced labour’ – coined into popular usage in Karel Čapek’s 1920 play ‘Rossum’s Universal Robots’. But while some people focus on the societal aspects of robots replacing human beings – as the False Maria robot did in Fritz Lang’s 1927 film ‘Metropolis’ – few people consider the flip side of that process: human beings behaving more and more like robots in a world of repetitive, regulated processes. That, I would argue, is where some of the real danger lies in an increasingly automated world.

Let me explain.

Rise of the robots

Recently, I had the privilege of taking part in a robotics workshop for schoolchildren – thanks to my ownership of a humanoid robot. Robotics, coding, AI, and even computer ethics are things that many of today’s primary school kids are being taught about. The UK government says that every child in that age group must know what algorithms are: the sequential, operational steps behind every piece of software. Turn left, turn right, switch on, switch off.

The good teachers who run these workshops have risen to the challenge by sharing a catchy ditty, ‘The Algorithm Song’, and getting their youngest pupils to sing it in class. By drumming the word into them via the tune of a recent hit single, the hope is that these kids will leave primary education understanding the importance of algorithms in their daily lives.

But as I will explain, we’re all singing ‘The Algorithm Song’ every day, and it’s not always to such a happy tune. And our fascination with humanoid robots is really just a minor-key refrain in what is already the soundtrack to this century: the rise of machine-based decision-making, sometimes based on increasingly antisocial rules.

But let’s stay with that robot refrain for a moment. At a Cloud Week event in Paris in July 2015, Fujitsu’s platform chief, Chiseki Sagawa, predicted that by 2025, humanoid robots will be commonplace in homes and offices. Sagawa is biased – machine-men have long been part of the cultural narrative in his home country, Japan – but that’s not to say that he’s wrong. In the same month, the first hotel staffed entirely by robots, Henn-na, opened in Nagasaki.

Gimmick or not, the stated aim of the venture isn’t to increase the sum of human happiness, it is to reduce HR costs and increase efficiency, and so make the hotel’s shareholders wealthier. We could describe that as the core condition of the system. On top of that rule are written the algorithms to make it real – step-by-step operational instructions that each robot follows in pursuit of pre-defined outcomes.

Essentially, the hotel’s human guests are entering a money-making machine that offers them a place to sleep. And as the Internet of Things grows, highly automated environments like this will proliferate. In the future, 47 per cent of all jobs will be automated, according to Dr Anders Sandberg, James Martin Research Fellow at Oxford University’s Future of Humanity Institute.

However, the Japanese obsession with manufacturing synthetic copies of themselves is little more than a diversion. Creating a mechanical device that can walk and talk is just a physical engineering challenge; it has little to do with the programming, intelligence, or intention behind the scenes.

Robots don’t need a human face, or any face at all. They’re already embedded in the machinery of Western society.

Of course, automation itself is not a bad thing; it was the core principle of the Industrial Revolution. In a networked, big data-driven world, the real issues today are the algorithms, along with the data that those algorithms rely on to create the desired, predestined outcomes. In that environment, systems increasingly rely on systems that rely on other systems. But what if the original algorithms behind any automation programme are based on a bad idea?

Who tells the tellers?

International banks are in the vanguard of strategic automation – investment platforms and customer service operations are just two of the key areas for digital transformation. But it’s easy to automate a bank’s customer-facing processes, because most are already controlled by software.

Anyone who has walked into their local branch this century is aware that today’s Financial Services companies are now, quite literally, software programmes fronted by human beings. Their employees – many excellent, professional people among them – follow step-by-step instructions in any scenario, and are forbidden to depart from them.

To walk into a branch to open an account, take out a mortgage, or apply for a credit card is to be talked through a series of slides by a human being. It’s purely a matter of rules and algorithms, of the customer satisfying preset conditions. Interrupt that flow to ask a question and you’re told, “I’ll just read this next slide to you.” The bank’s employees have no choice but to obey this step-by-step process.

In a sense, it’s insulting to everyone involved to give the process a human face. Whatever qualities these banks’ employees might have as imaginative, skilled, highly qualified individuals, they may as well be robots, like the ones in that Japanese hotel.

Today’s banks are giant compliance statements that sit behind a range of automated processes. These are designed to maximise remote shareholder profit, not public service. They’ve become sets of increasingly uncompromising algorithms, with which everyone – employees and customers – must comply.

This is all very well when the data that those systems rely on is accurate, and when the algorithms that drive them have been conceived to increase the sum of human happiness. But what if the data feeding those systems is wrong? What if the data-gathering process is also the result of flawed algorithms? And what if the underlying rules are no longer in customers’ best interests?

Also at Cloud Week 2015 in Paris, Constellation Research’s Ray Wang talked of a Digital Bill of Rights, which would protect people’s right to opt out of digital systems and prevent them being oppressed by their own data. That’s great, but it misses two key points. First, it is increasingly difficult to opt out of digital society, unless you opt out of basic services too – including pensions, benefits, and tax. And second, it’s often not ‘our’ data that oppresses us, but someone else’s.

Ladies and gentlemen: meet one of ‘The Disappeared’.

When algorithms attack

Five years ago, someone I know moved half a mile down the road from one apartment to a bigger one, in the same town where he’d been living for a decade. A week later, he received a threatening letter from a council 100 miles away. It told him that he owed nearly £1,200 in unpaid Council Tax for a property he’d never lived in. The letter was genuine, the automated result of a computer algorithm; no human being was involved.

Fearing identity theft, my friend phoned the council that had sent him the letter. They informed him that if he didn’t pay, they’d take steps to recover the money, such as by seizing property from his new address. He explained that he’d never even visited their town, let alone lived there, and so couldn’t possibly owe them the money.

They told him that someone with the same name as him had moved out of an address in town and disappeared, owing back-taxes. A national database flagged my friend as having moved at roughly the same time (actually some months later) and so, they said, he must be their absconding debtor. At that point, two different people, linked only by a (very) common name, became one person in the databases that govern our lives.
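To make that merger of identities concrete, here is a minimal Python sketch. It is not the council's actual system, whose rules I have never seen. The `Person` record, the names, identifiers, dates, and the 365-day window are all invented for illustration. It simply contrasts a rule that links records on name and move date alone with one that also demands agreement on a unique identifier.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Person:
    # Hypothetical record layout; every field value below is made up.
    name: str
    date_of_birth: date
    national_insurance_no: str
    moved_on: date


def naive_match(debtor: Person, candidate: Person, window_days: int = 365) -> bool:
    """Assumed rule: same name plus a house move within the window."""
    gap = abs((candidate.moved_on - debtor.moved_on).days)
    return candidate.name == debtor.name and gap <= window_days


def stricter_match(debtor: Person, candidate: Person) -> bool:
    """Additionally require unique identifiers to agree before linking."""
    return (
        naive_match(debtor, candidate)
        and candidate.national_insurance_no == debtor.national_insurance_no
        and candidate.date_of_birth == debtor.date_of_birth
    )


debtor = Person("John Smith", date(1975, 3, 2), "AB123456C", date(2010, 1, 15))
friend = Person("John Smith", date(1968, 11, 30), "ZY987654D", date(2010, 6, 1))

print(naive_match(debtor, friend))     # True: two people become one
print(stricter_match(debtor, friend))  # False: the identifiers disagree
```

The second check costs one extra comparison, yet it is the difference between a routine house move and a presumption of guilt.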

My friend’s age, blemish-free tax records, National Insurance number, former address just streets away, and good credit history were all irrelevant to this system – thanks to an algorithm that said “recover money quickly”. Once that instruction kicked in, other algorithms set about dismantling my friend’s financial reputation, piece by piece.

Like anyone who has found themselves trapped by poor data, his presumed and automated guilt placed all the onus on him to extricate himself from the mess. And, thanks to another algorithm, the machine-based judgement had already been logged with the credit reference agencies.

So now it was official: he was a tax-evader who had no right of appeal against his machine-generated ‘sentence’.

Banking on disaster

As bad luck would have it, my friend was about to open a bank account for his new company – a legal requirement for any new venture. At that time, he had a good income, no debts, no criminal record, a five-figure sum in his personal bank account, and a 50 per cent stake in a seafront property, having just sold his own and moved in with his partner. He also had no credit cards or store cards, preferring to buy only what he could afford (it seems almost quaint, doesn’t it?).

To people of his parents’ or grandparents’ generations, my friend would have been seen as a model of financial probity and common sense. But the core rule on which the Financial Services sector used to be based – debt bad, savings good – has been turned on its head. Debt is now good – for the shareholders that own it – as long as the debtor doesn’t abscond. Today, that debtor might be a country, such as Greece, or an undergraduate who is little more than grist to the UK’s financial mill.

This simple, but absurd, rule now underpins most Western economies. Algorithms are written based on it, and employees and customers must comply with them, because remote investors will make less money if they don’t. Everyone loses except the shareholders, and if the system fails we bail out the shareholders so we can lose all over again. We know this to be true.

But back to my unfortunate friend. According to the credit reference agencies (which are seen as holding benchmark data), and according to every bank in the UK (which are machine-based compliance systems) my friend was not only an undesirable customer who was incapable of managing his own affairs (no credit cards or store cards), but also an absconding debtor.

To any intelligent human being he was none of these things, but he was to a machine. As a result, every bank in the UK refused to open an account for the new company he had just set up. He wasn’t asking to borrow money, just to have a vanilla account so that his clients could pay him.

Let’s restate the case: a man with no criminal record, good income, prospects, clients, zero debt, £45k in the bank, and property, was refused a simple bank account with no credit facilities, thanks to an algorithm. Such a man stood less chance of opening a bank account than a criminal who had just been released from prison.

And the more he was refused the banks’ services, the more he was logged on databases as having been refused their services: a vicious, downward spiral of bad data feeding into more bad data, denying human beings any opportunity to intervene. A nightmare of cyclical compliance.
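That spiral can be sketched in a few lines of Python. This is a toy model under loud assumptions, not any bank's real scoring logic: the `apply_to_banks` function and its `max_refusals` threshold are hypothetical, and stand in for any rule that treats an earlier refusal as grounds for the next one.

```python
def apply_to_banks(prior_refusals: int, banks: int, max_refusals: int = 0) -> int:
    """Return the refusal count after applying to each bank in turn.

    Assumed rule: a bank refuses anyone whose record shows more than
    max_refusals earlier refusals, and logs its own refusal on that record.
    """
    refusals = prior_refusals
    for _ in range(banks):
        if refusals > max_refusals:
            refusals += 1  # the refusal itself becomes new negative data
    return refusals


print(apply_to_banks(prior_refusals=0, banks=5))  # 0: a clean record stays clean
print(apply_to_banks(prior_refusals=1, banks=5))  # 6: one bad entry compounds
```

Nothing about the applicant changes between the two runs except a single logged entry, and no step in the loop gives a human being the chance to remove it.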

Of course, you say, he could simply have contacted the credit reference agencies and paid them to correct his records. He did, but it’s not that simple, because the onus was on him to prove the system wrong. His problems persisted for months and they linger to this day, five years later. Why? Because errors spread like a virus in a networked system.

Healing ‘patient zero’ in a case of bad data doesn’t halt the epidemic, because, with networked systems, you have to cure an infinitely recurring number of patient zeroes. More, you could argue that any credit reference system that charges money to correct its mistakes has no incentive to be accurate.

Our credit reference and ratings system is a flawed, self-serving monster, one born of a truly insane idea: the more in debt you are, the better you are at managing your finances. That idea is crippling Western economies, as the 2008-09 crash proved.

One of the disappeared

In the end, my friend was forced to close his company, which could have been an asset to the community. Having no bank account meant that he couldn’t trade; it was as simple as that. Winding up the company cost him money and lost him income, and he was forced to make other decisions that lost him more. Today, he’s penniless, and wholly reliant on the debit card that goes with his 20-year-old personal bank account.

Recently, his bank told him that they’re shutting down the type of account he uses, which will force him to apply for a new one – a process mandated by another algorithm. What then? He may be left with no banking facility at all. At the time his troubles started, one of the high street bank managers he spoke to had the good grace to explain the problem, even as my friend was showing him proof after proof of his then-excellent finances. The manager said:

“If it was up to me, sir, I’d say yes to opening the account. But the computer won’t let me.” As clear a statement of human irrelevance to the banking sector as you could imagine.

Like every employee in every bank in the Western world today, that manager’s ability to act outside of the system’s machine-based rules was no better than that of a robot. Granted, the manager had emotions, empathy, intelligence, and the other traits that make us human, but he was no more capable than a robot of acting independently – even if reason and common sense told him to. He had to comply.

So, altogether now: let’s join the primary school children in singing ‘The Algorithm Song’ that I shared earlier in this report. Because as we face the rise of machine-based decision-making in the institutions that govern our lives, we are all helpless infants.

Conclusions

- Automation is not intrinsically bad: it makes many industries and processes more efficient and cost-effective. However, too little thought is being given to the thinking behind automation, to the strategies or to the algorithms that represent them. In many cases, human beings are the least important element in some organisations’ plans.

- While a Digital Bill of Rights might protect people should they wish to opt out of digital systems, a Citizens’ Right of Appeal against automated decisions is needed when they have no choice but to opt in. This should override the interests of any organisation that denies them basic services. Human society must step in and help.

- More, organisations should stop using technology to turn their employees into robots by preventing them from using their own intuition, judgement, intelligence, empathy, and skill. They’re human beings, serving other human beings, not robots serving robots.

Enquiries

07986 009109

chris@chrismiddleton.company

© Chris Middleton 2015, 2016